End-to-End Computer Vision API, Part 2: Local Experiments

This is the second part of a three-part series of posts:

- _Part 1: [Data Versioning and ML Pipelines](/blog/end-to-end-computer-vision-api-part-1-data-versioning-and-ml-pipelines)_

- Part 2: Local Experiments (this post)

- _Part 3: [Remote Experiments & CI/CD For Machine Learning](/blog/end-to-end-computer-vision-api-part-3-remote-exp-ci-cd)_

In part 1 of this series of posts, we introduced a solution to a common problem faced by companies in the manufacturing industry: detecting defects from images of products moving along a production line. The solution we proposed was a Deep Learning-based image segmentation model wrapped in a web API. We talked about effective management and versioning of large datasets and the creation of reproducible ML pipelines.

Here we'll learn about experiment management: generation of many experiments by tweaking configurations and hyperparameters; comparison of experiments based on their performance metrics; and persistence of the most promising ones.

Introduction

Earlier, we built a pipeline that produces a trained Computer Vision model. Now we need a way to efficiently tune its configuration and the hyperparameters of the model. We want the ability to:

- Run many experiments and easily compare their results to pick the best-performing ones.

- Track the global history of the model's performance, and map each improvement to a particular change in code, configuration, or data.

- Zoom into the details of each training run to help us diagnose issues.

Experiment Management

Our DVC pipeline relies on the parameters defined in the params.yaml file in this case (see other possible file types here). By loading its contents in each stage, we can avoid hard-coded parameters. It also allows rerunning the whole pipeline or parts of it under a different set of parameters. The DVC pipeline YAML file dvc.yaml supports a templating format to insert values from different sources in the YAML structure itself.
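As a rough illustration of loading the parameters in a stage, here is a minimal sketch. It assumes PyYAML is installed and that params.yaml has a train section with learning_rate and batch_size keys (the keys that appear later in this post). The function names load_params, get_param, and main are hypothetical and are not taken from the project's code.

```python
import argparse
from typing import Any, Dict, List, Optional


def load_params(path: str) -> Dict[str, Any]:
    """Read the whole parameters file into a nested dict."""
    # PyYAML is a third-party package (assumption); it is imported here so
    # that the helper below can be used without it.
    import yaml

    with open(path, encoding="utf-8") as f:
        return yaml.safe_load(f)


def get_param(params: Dict[str, Any], dotted_key: str) -> Any:
    """Look up a nested value with a dotted key such as 'train.learning_rate'."""
    value: Any = params
    for part in dotted_key.split("."):
        value = value[part]  # raises KeyError if the parameter is missing
    return value


def main(argv: Optional[List[str]] = None) -> None:
    """Hypothetical stage entry point: every setting comes from the config file."""
    parser = argparse.ArgumentParser(description="Hypothetical pipeline stage")
    parser.add_argument("--config", required=True, help="path to params.yaml")
    args = parser.parse_args(argv)

    params = load_params(args.config)
    learning_rate = get_param(params, "train.learning_rate")
    batch_size = get_param(params, "train.batch_size")
    print(f"learning_rate={learning_rate}, batch_size={batch_size}")
```

Calling main(["--config", "params.yaml"]) mirrors the way the stages in this post are invoked, for example python src/stages/eval.py --config=params.yaml.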

DVC tracks which stages of the pipeline have changed and reruns only those. By changes, we mean anything that might affect the predictive performance of the model, such as changes to the dataset, source code, or parameters. This not only ensures complete reproducibility but often significantly reduces the time needed to rerun the pipeline, while keeping results consistent on every rerun. For example, we started with a pixel accuracy metric (the percentage of pixels in an image that are classified correctly). Later, we realized that it might not be the best metric to track (as described in this blog post), and we decided to add the Dice coefficient to our metrics. There is no reason to rerun the often time-consuming data preprocessing and model training stages just to incorporate this update. DVC pipelines skip these stages without explicit instructions from us:

$ dvc exp run

Running stage 'check_packages':

> pipenv run pip freeze > requirements.txt

Stage 'data_load' didn't change, skipping

Stage 'data_split' didn't change, skipping

Stage 'train' didn't change, skipping

Running stage 'evaluate':

> python src/stages/eval.py --config=params.yaml

...

There is a super convenient set of Experiment Management features that make switching between reproducible experiments very easy without adding failed experiments to your git history. Check out this blog post, which talks about the idea of "ML Experiments as Code." That means treating experiments as you'd treat code, that is, using git to track all changes in configs, metrics, and data versions through text files. This approach removes the need for a separate database/online service to store experiment metadata. If we wanted to run a few experiments with learning rates of different orders of magnitude (e.g. 0.1, 0.01 and 0.001), we'd do that as follows:

$ dvc exp run --set-param train.learning_rate=0.1

...

$ dvc exp run --set-param train.learning_rate=0.01

...

$ dvc exp run --set-param train.learning_rate=0.001

...

Optionally, you can delay the execution of the experiments by putting them in a queue, and execute them later with the dvc exp run --run-all command.
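The queued variant of the learning-rate sweep could be scripted roughly as follows. This is a sketch rather than the authors' workflow: it assumes the --queue flag of dvc exp run is what places an experiment in the queue, and the helper name queue_commands is hypothetical. The script only builds and prints the commands; the subprocess call is left commented out so it can be enabled inside a DVC repository.

```python
import shlex
from typing import List


def queue_commands(param: str, values: List[str]) -> List[List[str]]:
    """Build one 'dvc exp run --queue' command per parameter value."""
    return [
        ["dvc", "exp", "run", "--queue", "--set-param", f"{param}={value}"]
        for value in values
    ]


# Values are kept as strings so they reach the command line exactly as written.
commands = queue_commands("train.learning_rate", ["0.1", "0.01", "0.001"])

# Command from the text above that executes everything in the queue.
run_all = ["dvc", "exp", "run", "--run-all"]

for cmd in commands + [run_all]:
    print(shlex.join(cmd))
    # import subprocess; subprocess.run(cmd, check=True)  # enable inside a DVC repo
```

Building the commands as argument lists rather than shell strings avoids quoting problems if a parameter value ever contains spaces.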

These local experiments are powered by Git references, and you can learn about them in this post. We can display all experiments with the dvc exp show command:

$ dvc exp show --only-changed --sort-by=dice_mean

─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────
Experiment               Created       train.loss  valid.loss  foreground.acc  jaccard.coeff  dice.multi  dice_mean  acc_mean  train.learning_rate  train.batch_size  models
─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────
workspace                -             0.10356     0.069076    0.90321         0.75906        0.92371     0.70612    0.97689   0.01                 16                5854528
exp                      Apr 09, 2022  0.13305     0.087599    0.77803         0.66494        0.89084     0.70534    0.97891   0.01                 8                 6c513ae
├── 83a4975 [exp-2d80e]  Apr 09, 2022  0.11189     0.088695    0.86905         0.75296        0.92005     0.70612    0.97689   0.01                 16                5854528
├── 675efb3 [exp-6c274]  Apr 09, 2022  0.10356     0.069076    0.90321         0.75906        0.92371     0.71492    0.98099   0.1                  16                770745a
└── c8b1857 [exp-04bcd]  Apr 09, 2022  0.11189     0.088695    0.86905         0.75296        0.92005     0.71619    0.98025   0.01                 8                 094c420
─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Once we identify the best one or few experiments (e.g., those with the highest dice_mean score), we can persist them by creating a branch out of an experiment:

$ dvc exp branch exp-04bcd my-branch

Git branch 'my-branch' has been created from experiment 'exp-04bcd'.

To switch to the new branch run:

git checkout my-branch

To track detailed information about the training process, we integrated DVCLive into the training code by adding a callback object to the training function. DVCLive is a Python library for logging machine learning metrics and other metadata in simple file formats, and it is fully compatible with DVC.
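To make the callback pattern concrete, here is a small standard-library sketch. It is deliberately not the DVCLive API: TsvMetricsLogger is a hypothetical stand-in that appends per-epoch metrics to a tab-separated file, and train is a toy loop whose loss values are synthetic placeholders. The point is only to show how a training function can hand its metrics to a logging callback after every epoch.

```python
import csv
import tempfile
from pathlib import Path
from typing import Callable, Dict


class TsvMetricsLogger:
    """Hypothetical stand-in for a metrics-logging callback (not DVCLive itself)."""

    def __init__(self, path: Path) -> None:
        self.path = Path(path)
        self._header_written = False

    def __call__(self, epoch: int, metrics: Dict[str, float]) -> None:
        # The first call creates the file and writes the header; later calls append.
        mode = "a" if self._header_written else "w"
        with self.path.open(mode, newline="") as f:
            writer = csv.writer(f, delimiter="\t")
            if not self._header_written:
                writer.writerow(["epoch", *metrics.keys()])
                self._header_written = True
            writer.writerow([epoch, *metrics.values()])


def train(n_epochs: int, on_epoch_end: Callable[[int, Dict[str, float]], None]) -> None:
    """Toy training loop; the loss is a synthetic placeholder, not a real model."""
    for epoch in range(n_epochs):
        loss = 1.0 / (epoch + 1)
        on_epoch_end(epoch, {"train.loss": loss})


with tempfile.TemporaryDirectory() as tmp:
    log_path = Path(tmp) / "metrics.tsv"
    train(3, TsvMetricsLogger(log_path))
    logged = log_path.read_text().splitlines()

print(logged[0])  # header row of the metrics file
```

In the actual project the callback comes from DVCLive, which also takes care of the file layout that DVC and Studio expect.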

Collaboration and Reporting with Iterative Studio

What if we needed to report the results to our team members or maybe hand over the project to one of them? How do we communicate everything we did since the conception of the project? What things resulted in the most significant improvements? What things didn't seem to matter at all?

Iterative Studio is a web-based application with seamless integration with DVC for data and model management, experiment tracking, visualization, and automation. It becomes especially valuable when collaborating with others on the same project or when there's a need to summarize the progress of the project through metrics and plots. All that's needed is to connect the project's repository with Studio. Then Studio will automatically parse all required information from dvc.yaml, params.yaml, and other text files that DVC recognizes. The result will be a repository view. The view for our project is here. It displays commits, metrics, parameters, the remote location of data and models tracked by DVC, and more.

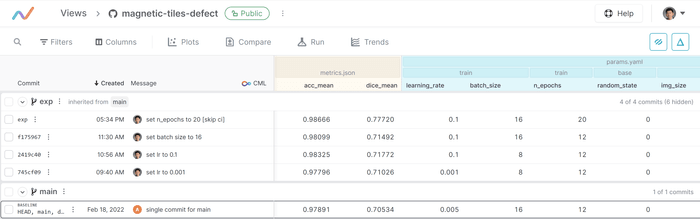

In the screenshot below, you can see that we created a separate exp branch that displays the results of the local experiments that we decided to upload to our remote repository, like trying different learning rates and batch sizes. Note that earlier, we discarded all local experiments whose performance we weren't satisfied with.

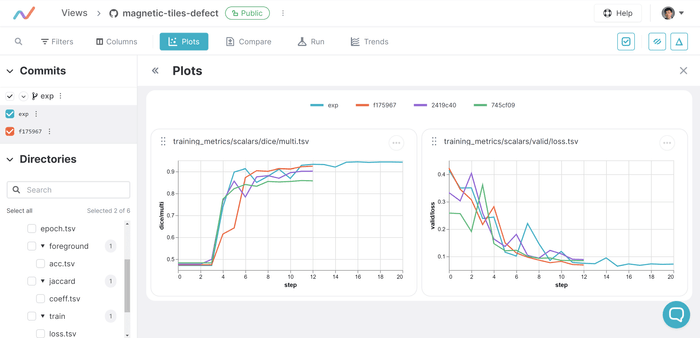

Below we can see the evolution of the key metrics and the value of the loss function throughout training (enabled by the earlier integration of DVCLive) for a set of selected commits.

Now, for example, if we see that the loss function hasn't reached a plateau after a certain number of epochs, we'll try increasing this number. Or, even worse, if we see the loss function growing over time, it'll be an indication that our learning rate may be too high. In this case, we may generate a few additional experiments with lower learning rate values, eventually picking the one that achieves good model performance after a reasonable number of training epochs.
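The reasoning above can be turned into a simple heuristic. The sketch below is only one possible rule of thumb, not something taken from the project: it compares the mean loss over the last few epochs with the mean over the few epochs before that. The function name diagnose_loss, the window size, and the 1% tolerance are all assumptions chosen for illustration.

```python
from statistics import mean
from typing import Sequence


def diagnose_loss(losses: Sequence[float], window: int = 3, tol: float = 0.01) -> str:
    """Classify the recent trend of a per-epoch loss curve.

    Returns 'growing', 'still_decreasing', 'plateau', or 'undetermined'
    (when there are fewer than two full windows of epochs).
    """
    if len(losses) < 2 * window:
        return "undetermined"

    earlier = mean(losses[-2 * window:-window])
    recent = mean(losses[-window:])

    # Relative change between the two windows; the tiny constant guards
    # against division by zero if the earlier losses are all zero.
    change = (recent - earlier) / max(abs(earlier), 1e-12)

    if change > tol:
        return "growing"
    if change < -tol:
        return "still_decreasing"
    return "plateau"


# Next steps suggested in the text for each outcome.
NEXT_STEP = {
    "growing": "try lower learning rate values",
    "still_decreasing": "try increasing the number of epochs",
    "plateau": "the number of epochs looks sufficient",
    "undetermined": "train for more epochs before judging",
}

trend = diagnose_loss([1.0, 0.8, 0.6, 0.5, 0.4, 0.3])
print(trend, "->", NEXT_STEP[trend])
```

A rule like this is no substitute for looking at the plots, but it can help flag suspicious runs when many experiments are generated at once.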

Summary

In this post, we talked about the following:

- How to run and view ML experiments locally and commit the most promising ones to the remote git repository

- How the integration of Iterative Studio with DVC enables collaboration, traceability, and reporting on projects with multiple team members

- How DVCLive allows us to peek into the training process and helps us decide what ideas to try next

What if we don't have a machine with a powerful GPU, and we'd like to take advantage of our cloud infrastructure? What if we'd like to have a custom report (with metrics, plots, and other visuals) accompany every commit/pull request on GitHub? The third (and last) part of this series of posts will demonstrate how another open-source tool from the Iterative ecosystem, CML, addresses these issues.