End-to-End Computer Vision API, Part 1: Data Versioning and ML Pipelines

This is the first part of a three-part series of posts:

- Part 1: Data Versioning and ML Pipelines (this post)

- _Part 2: [Local Experiments](/blog/end-to-end-computer-vision-api-part-2-local-experiments)_

- _Part 3: [Remote Experiments & CI/CD For Machine Learning](/blog/end-to-end-computer-vision-api-part-3-remote-exp-ci-cd)_

Lately, training a well-performing Computer Vision (CV) model in Jupyter Notebooks has become fairly straightforward. You can use pre-trained Deep Learning models and high-level libraries like fastai, keras, pytorch-lightning, etc., that abstract away much of the complexity. However, it's still hard to incorporate these models into a maintainable production application in a way that brings value to customers and the business.

Below we'll present tools that integrate naturally with your git repository and make this part of the process significantly easier.

Introduction

In this series of posts, we'll describe an approach that streamlines the lifecycle stages of a typical Computer Vision project, going from proof-of-concept to configuration and parameter tuning and, finally, to deployment in the production environment.

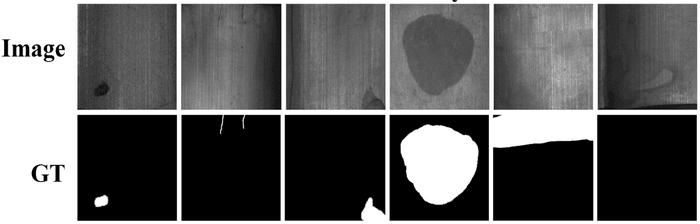

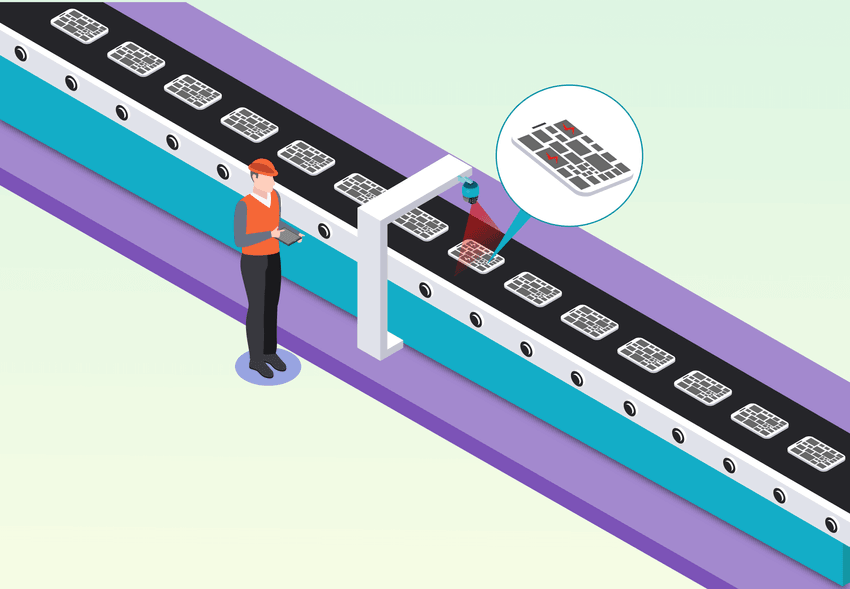

Automatic defect detection is a common problem encountered in many industries, especially manufacturing. A typical setup would include a conveyor belt that moves some products along the production line and a camera installed above the conveyor. The camera takes pictures of the products moving below and connects to a computer that controls it. This computer needs to send raw images to some defect detection service, receive information about the location and size of the defects, if any, and may even control what happens to a defective product by being connected to a robotic arm via a PLC (programmable logic controller).

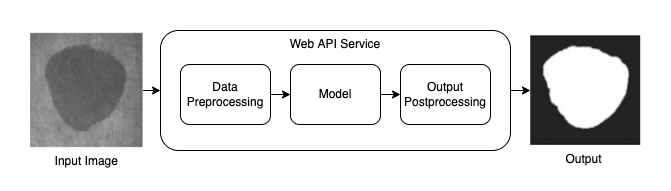

As our demo project, we've selected a very common deployment pattern for this setup: a CV model wrapped in a web API service. Specifically, we'll perform an image segmentation task on a magnetic tiles dataset first introduced in this paper and available in this GitHub repository.

- This post (part 1) introduces the concepts of data versioning and ML pipelines as they apply to Computer Vision projects.

- Part 2 will focus on experiment tracking and management - key components needed for effective collaboration between team members.

- In part 3, you’ll learn how to easily move your model training workloads from a local machine to cloud infrastructure and set up proper CI/CD workflows for ML projects.

Target Audience

We assume the target audience of this post to be technical folks who are familiar with the general Computer Vision concepts, CI/CD processes, and Cloud infrastructure. Familiarity with the Iterative ecosystem of tools such as DVC, CML, and Studio is not required but would help with understanding the nuances of our solution.

Summary of the Solution

All the code for the project is stored in this GitHub repository.

The CV API solution that we are proposing can be summarized in the following steps:

- The client service will submit an image to our API endpoint

- The image will be preprocessed to adhere to the specifications that our model expects

- The CV model will ingest the processed image and output its prediction image mask

- Some postprocessing will be applied to the image mask

- The API will reply to the client with the output mask
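
The steps above can be sketched as a small chain of functions. This is only an illustration of the request flow, not the code from the repository: the function names are hypothetical, images are plain nested lists, and predict is a stand-in that scores dark pixels as defects instead of calling a trained model.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def preprocess(raw: List[List[int]]) -> Image:
    """Scale 8-bit pixel values into the [0, 1] range."""
    return [[px / 255.0 for px in row] for row in raw]


def predict(image: Image) -> Image:
    """Stand-in for the segmentation model (hypothetical).

    Returns a per-pixel "defect" score; here it is simply pixel darkness.
    """
    return [[1.0 - px for px in row] for row in image]


def postprocess(scores: Image, threshold: float = 0.5) -> List[List[int]]:
    """Binarize the scores into a 0/1 mask."""
    return [[1 if s >= threshold else 0 for s in row] for row in scores]


def handle_request(raw: List[List[int]]) -> List[List[int]]:
    """Full request flow: preprocess, predict, postprocess, reply."""
    return postprocess(predict(preprocess(raw)))


# A 2x2 "image" with two bright pixels and two dark ones.
mask = handle_request([[255, 0], [200, 30]])
```

In a real service, handle_request would sit behind the API endpoint and predict would wrap the trained model.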

The repository also contains code for the web application itself, which can be found in the app directory. While the web application is very simple, its implementation is beyond the scope of this blog post. In short, it's based on the FastAPI library, and we deploy it to the Heroku platform through a Docker container defined in this Dockerfile.

Prerequisites for Reproduction

Feel free to fork the repository if you'd like to replicate our steps and deploy your own API service. Keep in mind that you'll need to set up and configure the following:

- GitHub account and GitHub application token

- pipenv installed locally

- AWS account, access keys, and an S3 bucket

- Heroku account and Heroku API key

For security reasons, you'll need to set up all keys and tokens through GitHub secrets. You'll also need to change the remote location (and its name) in the DVC config file for versioning data and other artifacts.

Proof-of-Concept in Jupyter Notebooks

A typical ML project would start with data collection and/or labeling, but we are skipping all this hard work because it was done for us by the researchers who published the dataset.

We'll get right to the exciting part of training CV models in Jupyter notebooks which you can find here. In short, there we have three notebooks:

- 1_ProcessData.ipynb downloads, processes, and organizes the data for easy loading into the training process later

- 2_TrainSegmentationModel.ipynb uses the fastai Deep Learning framework to train an image segmentation model

- 3_Evaluate.ipynb computes model performance on the test dataset

Jupyter Notebook is by far the most popular tool for quick exploratory work when it comes to data analysis and modeling. However, it's not without its limitations. One of the biggest issues with Jupyter is that it has no guardrails to ensure reproducibility: variables and objects can hold hidden state, and cells can be run out of order. While there are several projects that attempt to alleviate some of these issues (notably, nodebook, papermill, nbdime, nbval, nbstripout, and nbQA), they don’t solve them completely.

That's where the concepts of data versioning and ML pipelines come in.

Data Versioning

In most ML projects, training data changes gradually over time as new training instances (images in our case) get added while older ones might be removed. Simply creating snapshots of our training data at the time of training (e.g. labeling data directories with dates) quickly becomes unsustainable since these snapshots will contain many duplicates. Additionally, tracking which data directory was used to train each model becomes hard to manage very fast; and linking data versions and models to their respective code versions complicates things even further.

A much better approach is to:

- track only the deltas between different versions of the datasets; and

- have the project’s git repository store only the reference links to the data while the actual data is stored in remote storage

This is exactly what we can do with DVC by running only a couple of DVC commands. In turn, DVC handles all the underlying complexity of managing data versions, performing file deduplication, pushing and pulling to/from different remote storage solutions and more.
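
To make the deduplication idea concrete, here is a toy content-addressed store. It is a sketch of the general technique only and is not how DVC is implemented: the ContentStore class, the file names, and the choice of SHA-256 are all assumptions made for illustration. Each dataset version is a small manifest of file names and hashes (the kind of reference that could live in git), while the file contents are stored once per unique hash.

```python
import hashlib
from typing import Dict


class ContentStore:
    """Toy content-addressed store: identical bytes are kept only once."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}

    def add(self, data: bytes) -> str:
        # The hash of the content is its storage key.
        digest = hashlib.sha256(data).hexdigest()
        self.blobs.setdefault(digest, data)
        return digest

    def snapshot(self, files: Dict[str, bytes]) -> Dict[str, str]:
        """Return a small manifest mapping file names to content hashes."""
        return {name: self.add(data) for name, data in files.items()}


store = ContentStore()

# Version 1 of a hypothetical dataset: two images.
v1 = store.snapshot({"img_001.jpg": b"aaa", "img_002.jpg": b"bbb"})

# Version 2 adds one image; the two unchanged files are not stored again.
v2 = store.snapshot(
    {"img_001.jpg": b"aaa", "img_002.jpg": b"bbb", "img_003.jpg": b"ccc"}
)

unique_blobs = len(store.blobs)
```

Two snapshots covering five file entries end up holding only three stored blobs, which is why date-stamped directory copies are wasteful by comparison.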

Check out this tutorial to learn more about data and model versioning with DVC.

In this project, AWS S3 is our remote storage configured in the .dvc/config file. In other words, we store the images in an AWS bucket while only keeping references to those files in our git repository.

Refactoring Jupyter code into an ML pipeline

Another powerful set of DVC features is ML pipelines. An ML pipeline is a way to codify and automate the workflow used to reproduce a machine learning model. A pipeline consists of a sequence of stages.

First, we did some refactoring of our Jupyter code into individual and self-contained modules:

- data_load.py downloads raw data locally

- data_split.py splits data into train and test subsets

- train.py uses the fastai library to train a UNet model with a ResNet-34 encoder and saves it into a pickle file

- eval.py evaluates the model's performance on the test subset
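
As an example of what a self-contained stage can look like, here is a minimal sketch of a reproducible train/test split. It is not the project's data_split.py: the split_files function, the file names, and the test_fraction and seed values are hypothetical. The point is that a fixed seed and a sorted input make the split identical on every run, which a pipeline stage needs in order to be reproducible.

```python
import random
from typing import List, Sequence, Tuple


def split_files(
    files: Sequence[str], test_fraction: float, seed: int
) -> Tuple[List[str], List[str]]:
    """Return (train, test) lists; the same inputs always give the same split."""
    # Sort first so the result does not depend on directory listing order.
    shuffled = sorted(files)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


files = [f"img_{i:03d}.jpg" for i in range(10)]
train, test = split_files(files, test_fraction=0.2, seed=42)
```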

Specific execution commands, dependencies, and outputs of each stage are defined in the pipeline file dvc.yaml (more about pipeline files here).
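
The following sketch shows how declared dependencies and outputs are enough to derive an execution order. It illustrates the idea rather than DVC's internals: the stage names match this project, but every file path is a hypothetical placeholder, and the real values live in dvc.yaml. A stage depends on whichever stage produces one of its inputs, and a topological sort turns that graph into a valid run order.

```python
from graphlib import TopologicalSorter

# Hypothetical deps/outs for each stage (placeholder paths, not the real ones).
stages = {
    "check_packages": {"deps": [], "outs": ["requirements.txt"]},
    "data_load": {"deps": ["requirements.txt"], "outs": ["data/raw"]},
    "data_split": {
        "deps": ["requirements.txt", "data/raw"],
        "outs": ["data/train", "data/test"],
    },
    "train": {
        "deps": ["requirements.txt", "data/train"],
        "outs": ["models/model.pkl"],
    },
    "evaluate": {
        "deps": ["requirements.txt", "data/test", "models/model.pkl"],
        "outs": ["metrics.json"],
    },
}

# Map every output path to the stage that produces it.
producer = {out: name for name, spec in stages.items() for out in spec["outs"]}

# A stage depends on whichever stages produce its inputs.
graph = {
    name: {producer[dep] for dep in spec["deps"] if dep in producer}
    for name, spec in stages.items()
}

# Predecessors always come before the stages that need them.
order = list(TopologicalSorter(graph).static_order())
```

With these placeholder paths, the only valid order is check_packages, data_load, data_split, train, evaluate.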

We've also added an optional check_packages stage that freezes the environment into a requirements.txt file containing all Python packages and their versions installed in the environment. We enabled the always_changed field in the configuration of this stage to ensure DVC reruns this stage every time. All other stages have this text file as a dependency. Thus, the entire pipeline will be rerun if anything about our Python environment changes.
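
A stage like this can be approximated with the standard library alone. The sketch below is an assumption about how such a script might look, not the project's actual check_pkgs.py, and the freeze and write_requirements names are hypothetical. It lists every installed distribution with its version and writes the sorted pins to a file, so the file content changes only when the environment changes.

```python
from importlib import metadata
from pathlib import Path
from typing import List


def freeze() -> List[str]:
    """Return sorted 'name==version' pins for all installed distributions."""
    pins = set()
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:  # skip distributions with broken metadata
            pins.add(f"{name}=={dist.version}")
    # Sorting keeps the output stable between runs.
    return sorted(pins, key=str.lower)


def write_requirements(path: str = "requirements.txt") -> None:
    """Write one pin per line to the given file."""
    Path(path).write_text("".join(f"{pin}\n" for pin in freeze()))
```

Calling write_requirements() produces the file that the other stages would list as a dependency.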

We can see the whole dependency graph (directed acyclic graph, to be exact) using the dvc dag command:

$ dvc dag

+----------------+

| check_packages |

*****+----------------+

***** * ** **

**** ** ** ***

*** ** ** ***

+-----------+ ** * ***

| data_load | ** * *

+-----------+ ** * *

*** ** * *

* ** * *

** * * *

+------------+ * *

| data_split |*** * *

+------------+ *** * *

* *** * *

* *** * *

* ** * *

** +-------+ ***

*** | train | ***

*** +-------+ ***

*** ** ***

*** ** ***

** ***

+----------+

| evaluate |

+----------+

The entire pipeline can be easily reproduced with the dvc exp run command:

$ dvc exp run

Running stage 'check_packages':

> python src/stages/check_pkgs.py --config=params.yaml

...

Running stage 'data_load':

> python src/stages/data_load.py --config=params.yaml

...

Running stage 'data_split':

> python src/stages/data_split.py --config=params.yaml

...

Running stage 'train':

> python src/stages/train.py --config=params.yaml

...

Running stage 'evaluate':

> python src/stages/eval.py --config=params.yaml

...

Summary

In this first part of the blog post, we talked about the following:

- Common difficulties when building a Computer Vision web API for defect detection

- Pros and cons of exploratory work in Jupyter Notebooks

- Versioning data in remote storage with DVC

- Moving and refactoring the code from Jupyter Notebooks into DVC pipeline stages

In the second part, we’ll see how to get the most out of experiment tracking and management by seamlessly integrating DVC, DVCLive, and Iterative Studio.