The DVC 3.0 Stack: Beyond the Command Line

DVC has brought engineering best practices to the ML and data world, making model development more standardized and reproducible. Now we want DVC to work where the command line isn't the right fit and it's easier to work in code, in an IDE, or on the web. This doesn't mean we forgot about DVC fundamentals: data versioning remains the core of what we do.

DVC Stack

DVC 3.0 helps you experiment, from notebook exploration to model management, and works smarter with your cloud/remote storage to make data versioning painless.

Experiment Tracking and Beyond

In DVC 2.0, we first released DVC experiments, providing a way to track experiments as hidden, lightweight Git commits, so you don't have to separately manage your experiments and code. Now it's easier to start tracking experiments from your Python script or notebook (see examples). You only need a Git repo and DVCLive, DVC's Python logging library. You don't need prior DVC knowledge or an existing DVC project.

PyTorch Lightning:

    from dvclive.lightning import DVCLiveLogger

    ...

    trainer = Trainer(logger=DVCLiveLogger(save_dvc_exp=True))
    trainer.fit(model)

Hugging Face:

    from dvclive.huggingface import DVCLiveCallback

    ...

    trainer.add_callback(DVCLiveCallback(save_dvc_exp=True))
    trainer.train()

Keras:

    from dvclive.keras import DVCLiveCallback

    ...

    model.fit(
        train_dataset, validation_data=validation_dataset,
        callbacks=[DVCLiveCallback(save_dvc_exp=True)])

Plain Python:

    from dvclive import Live

    with Live(save_dvc_exp=True) as live:
        live.log_param("epochs", NUM_EPOCHS)

        for epoch in range(NUM_EPOCHS):
            train_model(...)
            metrics = evaluate_model(...)
            for metric_name, value in metrics.items():
                live.log_metric(metric_name, value)
            live.next_step()

With the DVC extension for VS Code, you get an experiment tracking workbench without any servers or logins. Your experiments are also available in our collaboration hub Studio and connected to your Git repo automatically, so you can share, review and merge like you would with code. You can work locally when you want and use Studio to share if and when it suits you, just like in Git.

Model Management

With the Studio Model Registry, you can use DVC to manage your entire model lifecycle inside your Git workflow, from creating the model to deploying it in any deployment system. Our ethos for model management is consistent with everything else we do: it's all about integrating with your existing stack and tools, and empowering you to build your workflows around GitOps principles and automation.

Cloud Experiments (Alpha Release)

When we released DVC 2.0, we also launched the cml runner command to run continuous integration (CI) on your own cloud instances so you could automate large ML jobs. Cloud experiments build on this technology without CI, meaning less setup (you can configure directly in Studio). With the alpha release of Studio Cloud Experiments, you can run DVC experiments on your own cloud infrastructure in a few clicks, including with GPU and spot instance support.

Hyperparameter Optimization

DVC can also help you do hyperparameter optimization by integrating with other tools. You can queue an entire grid search of experiments, configure multiple complex model architectures with Hydra integration, and track your Optuna studies.
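As a rough illustration of queuing a grid search, the sketch below builds one `dvc exp run --queue` command per combination of parameter values. The parameter names (`train.lr`, `train.batch_size`) and their values are hypothetical placeholders, and the commands are only printed, not executed, so the sketch runs without DVC installed.

```python
from itertools import product
import shlex

# Hypothetical parameter names and values; substitute keys from your own params file.
GRID = {
    "train.lr": [0.1, 0.01],
    "train.batch_size": [32, 64],
}


def queue_commands(grid):
    """Return one `dvc exp run --queue` command (as an argv list) per grid point."""
    names = list(grid)
    commands = []
    for values in product(*(grid[name] for name in names)):
        cmd = ["dvc", "exp", "run", "--queue"]
        for name, value in zip(names, values):
            # Each --set-param overrides one parameter for this experiment only.
            cmd += ["--set-param", f"{name}={value}"]
        commands.append(cmd)
    return commands


if __name__ == "__main__":
    for cmd in queue_commands(GRID):
        # Print instead of executing, so nothing is queued by accident.
        print(shlex.join(cmd))
```

Inside a DVC project, each argv list could be passed to `subprocess.run`, and the queued experiments could then be started with `dvc queue start`.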

Smarter Cloud/Remote Storage

We are committed to building the best data versioning experience. This means making DVC work with your existing data stack and not trying to replace it. We have focused on working more closely with cloud storage (and non-cloud storage) by making DVC not only faster but smarter.

Minimizing Downloads

Avoiding unnecessary downloads saves time and space in ways that transfer speedups alone never could. You can now add or modify individual files in a larger dataset. If you have a large dataset in remote storage, you can pull and modify any file without needing to download the full dataset.

You can also run or verify a pipeline without pulling data first. You can skip downloading data for stages that haven't changed and automatically download only the data needed for stages that have changed.
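To make the idea concrete, here is a toy model of that decision. It is not DVC's implementation: the `Stage` class, its fields, and the `data_to_download` helper are all hypothetical, and real change detection is reduced to a boolean flag set by hand.

```python
from dataclasses import dataclass, field


@dataclass
class Stage:
    """Hypothetical stand-in for a pipeline stage; not a DVC class."""
    name: str
    deps: list = field(default_factory=list)  # data paths the stage reads
    changed: bool = False                     # True if the stage must be rerun


def data_to_download(stages, local_files):
    """Return the dependencies that must be fetched before rerunning.

    Unchanged stages are skipped entirely, so their missing data is never fetched.
    """
    needed = []
    for stage in stages:
        if not stage.changed:
            continue
        for dep in stage.deps:
            if dep not in local_files and dep not in needed:
                needed.append(dep)
    return needed


pipeline = [
    Stage("prepare", deps=["data/raw"]),
    Stage("train", deps=["data/prepared"], changed=True),
    Stage("evaluate", deps=["data/prepared", "data/test"], changed=True),
]

# Only data/test is present locally; data/raw is never needed because
# the prepare stage has not changed.
print(data_to_download(pipeline, local_files={"data/test"}))  # prints ['data/prepared']
```

On the command line, this behavior corresponds, to our understanding, to running `dvc repro` with the `--pull` and `--allow-missing` options.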

Cloud Versioning

You shouldn't have to create extra copies of data that's already backed up and versioned on the cloud. DVC cloud versioning enables you to import data that's already versioned by your cloud provider. In the example below, DVC knows not to push any data to its own storage because it is already versioned by the cloud. Pulling the data later will recover it from its original source location.

    $ dvc import-url --version-aware s3://mybucket/data
    Importing 's3://mybucket/data' -> 'data'

    $ dvc push
    Everything is up to date.

Pythonic API

You may need to work with your cloud data outside of the command-line workflow of pushing and pulling. The DVCFileSystem API enables you to read and manage files and directories from remote DVC repos like you would for a local filesystem. In the example below, each file in the data/prepared directory is streamed in as text.

>>> from dvc.api import DVCFileSystem

>>> url = "https://github.com/iterative/example-get-started.git"

>>> fs = DVCFileSystem(url, rev="main")

>>> for f in fs.find("data/prepared"):

... text = fs.read_text(f)

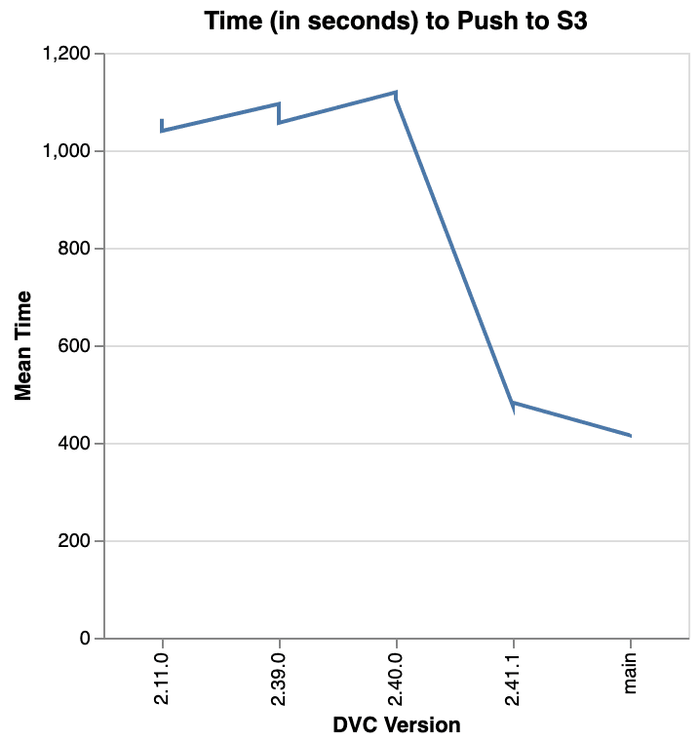

... # process the dataFaster Performance

Sometimes you just need faster performance, especially for large data downloads and uploads. We have focused on improving performance where it matters most. For example, pushing data to S3 is 2.5x faster in DVC 3.0 than in early versions of DVC 2.x according to our benchmarks.

Thank You!

Our constant interaction with the DVC community gives us feedback on what should be improved. We heard from you that the ML landscape is already complex and you want to keep your tools simple. That's why many of the new "features" are improvements to existing functionality, and why we are building this stack of tools to make DVC easier, more flexible, and the solid choice for your MLOps workflows.

Finally, none of these improvements would be possible without the support of the teams who work on the entire DVC stack.

Thanks to all of you who make DVC and its community what it is!

Get Started with the DVC 3.0 Stack

Get started with DVC 3.0 or the other tools in the DVC stack: